This article explains the well-known LOF-algorithm. We provide intuition for density-based outlier detection, show the problems inherent to this task and then take a look at how LOF solves these problems.

Motivation

Outlier detection is important for many real world applications - typical examples include fraud detection, network intrusion detection or the detection of faulty sensor data. Although there is no "real" definition of what constitutes an outlier, the well-established definition is due to 1:

[...] an observation that deviates so much from other observations as to arouse suspicion that it was generated by a different mechanism.

How does one go about finding these observations? The literature distinguishes different models to detect outliers 2: extreme values, clustering models, distance-based models, density-based models, probabilistic models, and information theoretic models. For our purposes, distance-based and density-based models are the most relevant. Note that both are closely linked.

Intuition

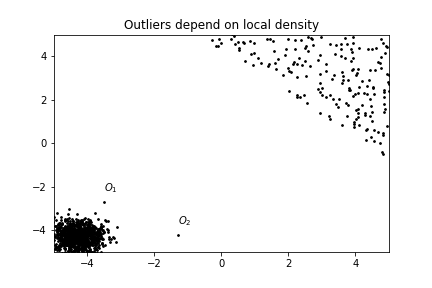

The original paper 3 introduced the local outlier factor (LOF) as a density-based model. Going with the disambiguation from 2, this means that "the local density of a data point is used to define its outlier score". Let's visualize this:

Looking at the figure, we expect and to have the highest outlier score - even though has the same distance from the cluster in the bottom left as all the points have to each other in the cluster on the top right.

The idea of LOF is to look at the neighborhood of each point individually and to compute its "outlierness" based on its neighbors. To this end, one quantifies the difference of the density of the point to the density of its neighboring points. Put more bluntly: Imagine living in a villa in central Manhattan - then you are probably an outlier because Manhattan is a high-density area but you are in a less dense spot. Now, imagine living a rural area. Here you are also in a less dense spot but so are all of your neighbors and you are not an outlier.

Formal Definition

Let's get to the definitions of the paper and see how they map to our intuition of density-based outlier detection. All of the following definitions are repeated in their original form from 3. Note that refers to the full data set.

k-distance of an object p: For any positive integer , the -distance of object , denoted as k-distance(p) is defined as the distance between and an object such that:

- for at least objects it holds that , and

- for at most objects it holds that .

Put differently, the k-distance of an object is the distance to the k-th nearest object. Or, starting from an object , we compute the distance to all objects , sort them in increasing order and take the k-th element of this list.

k-distance neighborhood of an object p: Given the k-distance of , the k-distance neighborhood of p contains every object whose distance from is not greater than the k-distance, i.e., .

Let's illustrate this with an example. Suppose we have five data point in our data set and we are interested in the 3-distance of as well as its neighborhood. We compute for all and order the results. Assume these are the distances:

Then our 3-distance is 0.5 and there are three objects in the neighborhood.

In Euclidian space, think of this as putting a (hyper-)sphere with its center at and increasing the radius until k elements are in it. As distances can be equal there might be more than k elements in the circle.

reachability distance of an object p w.r.t. object o: Let be a natural number. The reachability distance of object w.r.t. is defined as

This is used to smooth the density estimation: For objects far away from each other, their distance is the actual distance. For objects close to each other, their distance is their k-distance.

Please note that the reach-distance is not a proper distance measure as is not symmetric.

local reachability density of an object p: The local reachability density of p is defined as

Here, MinPts "replaces" parameter k and we look at the MinPts-neighborhood of p.

In general, a density is mass per volume, i.e., this definition implicitly assumes each element in the neighborhood of p to have unit mass. "The volume" is given by the sum of all reachability-distances of the element in the neighborhood of a point.

With these preliminaries done, we can finally define the local outlier factor:

(local) outlier factor of an object p: The local outlier factor of p is defined as

We can actually rewrite this formula slightly,

so the local outlier factor of a point is given by the density of its neighboring points, times the sum of the reach-distance to these points.

Based on this last formula, we see that a point has a large local outlier factor if its neighbors are in dense areas (then the first sum becomes large) and if these points are far away, i.e., the point has no direct neighbors. It turns out that this notion captures the locality aspect of outlier detection surprisingly well.

Discussion

The main algorithmic difficulty of LOF is finding the neighbors of each point in the data set. The scikit-learn implementation mitigates this problem by constructing a Ball Tree or a kd-tree. To speed up the computation further, one could use a more fuzzy approach: Instead of of looking at a specific neighborhood one looks at a so-called " -neighborhood" and thus allows a controllable error. See, e.g., 4.

The main difficulty from a user's point of view is setting the parameter k appropriately - the best value of k depends on the data set and the algorithm is very sensitive with regard to its parameter.

References

-

D Hawkins. Identification of Outliers. Springer Netherlands, 1980. ↩

-

C C Aggarwal. Outlier Analysis. Springer International Publishing, 2nd ed., 2017. ↩↩

-

M M Breunig et al. LOF: identifying density-based local outliers. Proceedings of the 2000 ACM SIGMOD international conference on Management of data (New York, NY, USA, May.-2000), 93–104. ↩↩

-

P Ram and K Sinha. Revisiting kd-tree for Nearest Neighbor Search. Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (Anchorage AK USA, Jul.-2019), 1378–1388. ↩